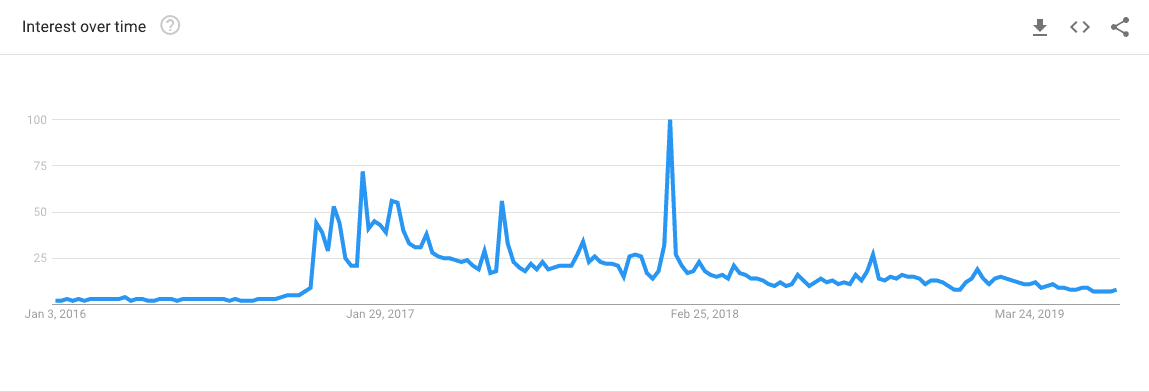

Fake news is a pretty funky problem, and a really recent one too. Look at the Google Trends screenshot below:

We were lucky enough to find a dataset of fake news stories on Kaggle. However, we struggled to find a good dataset of real news, so we decided to create our own. We built a web scraper that grabbed articles from a variety of reliable sources across the political spectrum: liberal, moderate, and conservative.

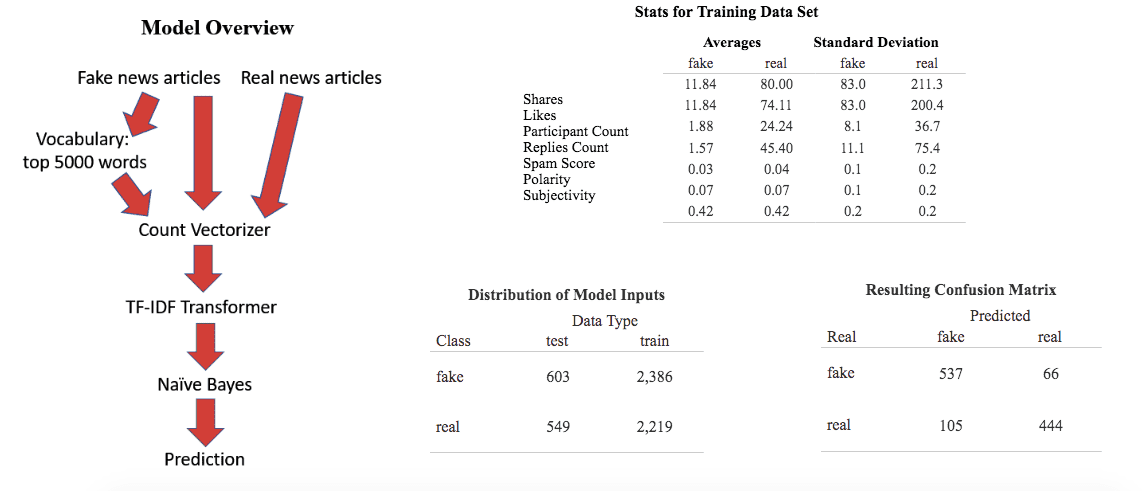

From there, we developed a Naive Bayes classifier that could predict whether an article was fake with 85% accuracy. The model was trained on a set of 4,000 articles and tested on a separate set of 1,100 articles.
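To give a feel for how a Naive Bayes text classifier works, here is a minimal sketch in plain Python. It is not our actual hackathon code: the `tokenize` helper, the `NaiveBayes` class, and the four toy training sentences are all made up for illustration, and it assumes a simple multinomial model with add-one smoothing over word counts.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens (hypothetical helper)."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.doc_counts = Counter(labels)        # number of documents per class
        self.word_counts = defaultdict(Counter)  # word frequencies per class
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior for this class
            for word in tokenize(text):
                if word in self.vocab:             # skip words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            scores[label] = score
        return max(scores, key=scores.get)


def accuracy(model, texts, labels):
    """Fraction of texts whose predicted label matches the true label."""
    correct = sum(model.predict(t) == y for t, y in zip(texts, labels))
    return correct / len(labels)


# Toy, made-up training data purely for illustration
train_texts = [
    "shocking secret they do not want you to know",
    "you will not believe this shocking miracle cure",
    "city council approves budget for road repairs",
    "senate committee holds hearing on budget bill",
]
train_labels = ["fake", "fake", "real", "real"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("shocking miracle secret revealed"))
print(model.predict("council holds hearing on road budget"))
```

In practice you would hold out a test set, as we did, and call `accuracy(model, test_texts, test_labels)` on it rather than on the training data.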

With a working model in hand, we set up a web demo with a really simple user flow:

- Enter the URL of the page in question
- We fetch the page at that URL
- We parse the information on the page and feed it into the model
- We return the result of the model, as well as sentiment analysis and the similarity between the headline and the article (one key indicator of fake news)
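The flow above can be sketched with nothing but the Python standard library. This is a rough illustration under stated assumptions, not the demo's real code: `ArticleParser`, `fetch_html`, `parse_article`, `cosine_similarity`, and `analyze` are hypothetical names, the headline is assumed to live in the page's `<title>` tag and the article text in `<p>` tags, and headline/article similarity is approximated with cosine similarity over word counts. The `classify` argument stands in for whatever trained model you have, and sentiment analysis is left out.

```python
import math
import re
from collections import Counter
from html.parser import HTMLParser
from urllib.request import urlopen


class ArticleParser(HTMLParser):
    """Collect <title> text as the headline and <p> text as the body."""

    def __init__(self):
        super().__init__()
        self.headline_parts = []
        self.body_parts = []
        self._current = None  # "title" or "p" while inside one of those tags

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "p"):
            self._current = tag

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.headline_parts.append(data)
        elif self._current == "p":
            self.body_parts.append(data)


def fetch_html(url):
    """Download a page and decode it as UTF-8 (requires network access)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def parse_article(html):
    """Return (headline, body) with whitespace collapsed to single spaces."""
    parser = ArticleParser()
    parser.feed(html)
    headline = " ".join(" ".join(parser.headline_parts).split())
    body = " ".join(" ".join(parser.body_parts).split())
    return headline, body


def cosine_similarity(text_a, text_b):
    """Cosine similarity between the word-count vectors of two texts."""
    a = Counter(re.findall(r"[a-z']+", text_a.lower()))
    b = Counter(re.findall(r"[a-z']+", text_b.lower()))
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def analyze(html, classify):
    """Parse a page, classify its body, and score headline/body similarity."""
    headline, body = parse_article(html)
    return {
        "headline": headline,
        "label": classify(body),  # classify is a stand-in for the trained model
        "headline_similarity": cosine_similarity(headline, body),
    }
```

With a real page you would call something like `analyze(fetch_html(url), model.predict)`, where `url` is whatever the user entered and `model` is your trained classifier.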

We ended up winning the 2017 Seattle Global AI Hackathon. Check it out for yourself here and check out the GitHub repo here!